The Apache Hop team released Apache Hop 2.3.0 yesterday.

Run Apache Hop at its full potential with support and know-how from experts who have been involved since day one.

Do you need a new or customized plugin for Apache Hop that isn't on the community's radar?

We build and customize plugins for you. Entirely customized Hop builds are also an option.

Hop is a broad and deep platform that takes knowledge and experience to deliver ideal results.

Our classroom and e-learning platforms bring you up to speed. In a series of coaching and audit sessions, we teach data teams how to work according to best practices for optimal results.

Your mission-critical Apache Hop project can't be at risk. All systems fail sooner or later, and our support desk is ready to help when that happens.

We take support seriously: we want to support you throughout your project's entire life cycle, with training, coaching, and audit sessions included.

Apache Hop is a perfect choice for all your data integration and data orchestration needs, but it especially excels in a number of architectures and use cases.

Graphs are taking the world by storm with market leader Neo4j at the forefront. Graph data models and queries are extremely powerful and open up entirely new ways of analyzing your data. However, reliable, repeatable, and scalable graph data loading is often not a trivial task.

Apache Hop's unparalleled graph data loading and querying functionalities put Hop in pole position for all your graph data loading needs.

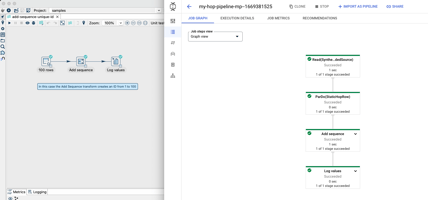

Large-scale distributed data processing platforms like Apache Spark, Apache Flink, and Google Dataflow make processing huge amounts of data possible. Apache Beam offers a unified programming model to define ETL, batch and stream pipelines on any of these platforms.

Where Apache Beam makes creating a unified code base on all major distributed platforms possible, Apache Hop makes it easy. Hop's visual pipeline development, unit testing and project life cycle support allow data project teams to write pipelines once and run them where it makes most sense.

Apache Hop started its journey in 2019 as a fork of Kettle, the open source project behind Pentaho Data Integration.

Although Apache Hop and Kettle are now different, independent, and incompatible projects, their shared history allows Hop to import Kettle projects.

This upgrade not only converts Kettle jobs and transformations to Hop workflows and pipelines, but also gives access to

Check our blog for the latest Apache Hop news, release previews, and behind-the-scenes information.

Google Cloud Dataflow is a unified stream and batch data processing engine that...

The Apache Hop community just released Apache Hop 2.2.0, the fifth (!!) and final release of 2022,

Leave your contact details here and we'll be in touch.